
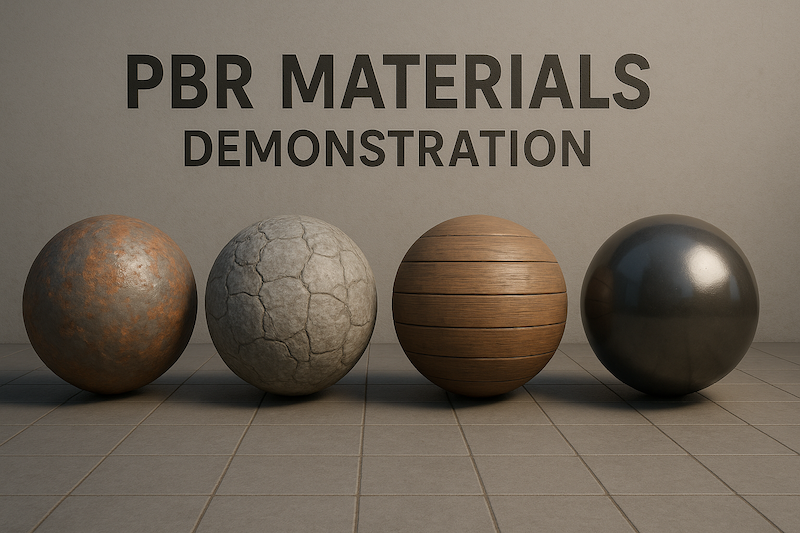

Physically Based Rendering

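PBR materials approximate how light interacts with real-world surfaces, describing each material with physical parameters such as base color, metalness, and roughness (in Three.js, the MeshStandardMaterial and MeshPhysicalMaterial classes). Because those parameters stay valid under any lighting, a PBR asset keeps looking believable as the stage lighting changes. In concert visuals, this enables convincing holographic performers, lifelike stage elements, and immersive environments.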

- Real-time reflections and refractions that respond to stage lighting

- Material properties that mimic metals, glass, fabrics, and organic surfaces

- Environment mapping to reflect surrounding light sources

- AI-controlled material parameters that shift based on musical elements

- Neural network-driven texture generation for real-time dynamic surfaces
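Two pieces of the PBR model described above can be sketched in a few lines of TypeScript. The first, `fresnelSchlick`, is Schlick's approximation of Fresnel reflectance, which explains why surfaces reflect more of the stage lighting at glancing angles; the 0.04 base reflectance for non-metals is a widely used convention. The second, `materialFromEnergy`, is a hypothetical mapping from a normalized musical energy value to roughness and metalness; its ranges are assumptions chosen for illustration, not values from any particular tool.

```typescript
// Minimal PBR helpers (illustrative sketch, not Three.js internals).

/** Schlick's approximation of Fresnel reflectance: F = F0 + (1 - F0) * (1 - cos(theta))^5. */
function fresnelSchlick(cosTheta: number, f0: number): number {
  // Clamp so numeric noise cannot push the cosine outside [0, 1].
  const c = Math.min(Math.max(cosTheta, 0), 1);
  return f0 + (1 - f0) * Math.pow(1 - c, 5);
}

// Common convention: dielectrics (plastic, fabric, glass) reflect about 4% head-on.
const DIELECTRIC_F0 = 0.04;

interface MaterialParams {
  roughness: number; // 0 = mirror-like, 1 = fully diffuse
  metalness: number; // 0 = dielectric, 1 = metal
}

/**
 * Hypothetical mapping from normalized musical energy (0..1) to material
 * parameters: louder passages make the surface glossier and more metallic.
 * The ranges are illustrative assumptions.
 */
function materialFromEnergy(energy: number): MaterialParams {
  const e = Math.min(Math.max(energy, 0), 1);
  return {
    roughness: 0.8 - 0.7 * e, // 0.8 when quiet, 0.1 at peak energy
    metalness: 0.2 + 0.6 * e, // 0.2 when quiet, 0.8 at peak energy
  };
}

// Head-on, a dielectric reflects about 4%; at a grazing angle reflectance approaches 100%.
console.log(fresnelSchlick(1, DIELECTRIC_F0).toFixed(3)); // 0.040
console.log(fresnelSchlick(0, DIELECTRIC_F0).toFixed(3)); // 1.000
console.log(materialFromEnergy(0.5));
```

In a Three.js scene, the values returned by `materialFromEnergy` could be assigned each frame to the `roughness` and `metalness` properties of a `MeshStandardMaterial`, which uses the same metalness/roughness workflow.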

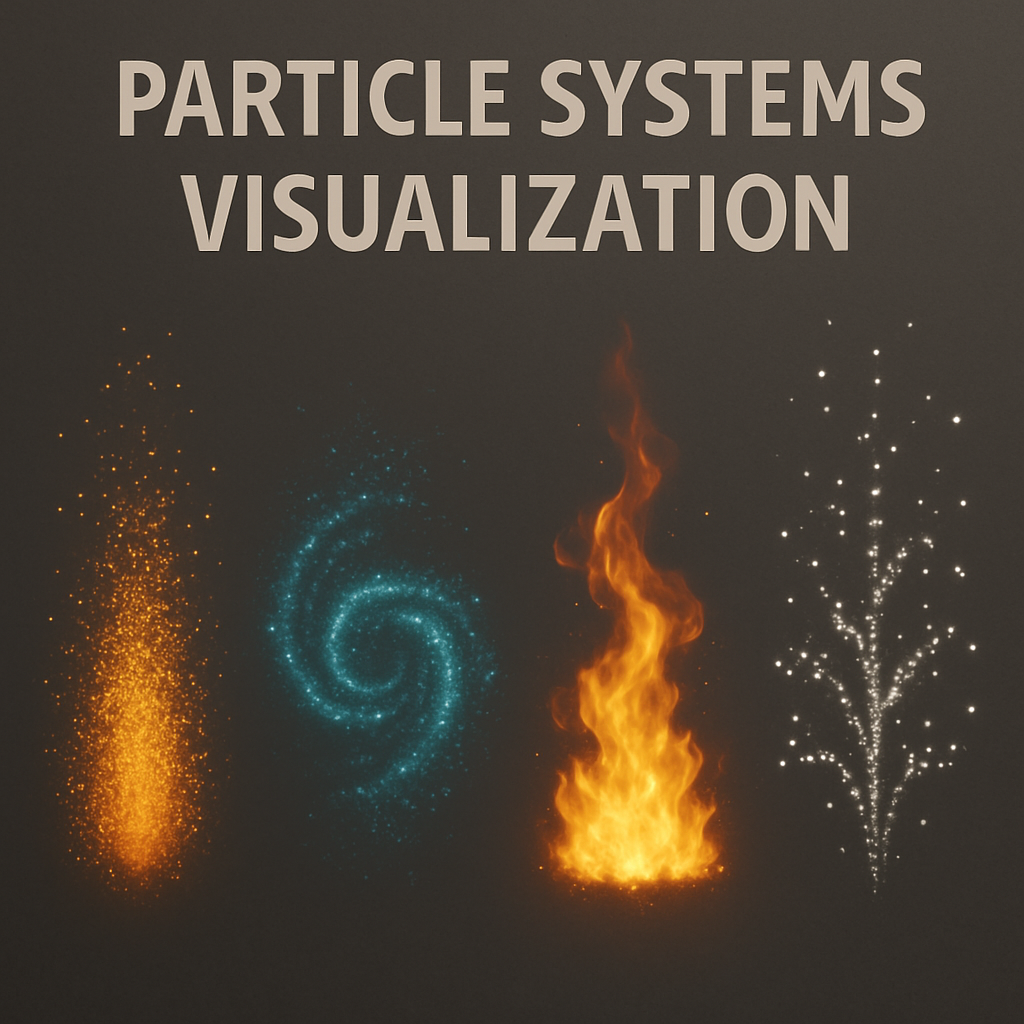

Advanced Particle Systems

Particle systems underpin many of the most striking visual effects at concerts. AI-driven particle systems can respond to the music as it plays, creating dynamic flows, explosions, and atmospheric effects that heighten the emotional impact of a performance.

- Millions of particles with realistic physics and interactions

- Particle behavior that responds to beats, vocals, and musical intensity

- GPU-accelerated computation for smooth performance

- Emergent behaviors that create organic-looking forms and movement

- Machine learning models that predict and anticipate musical changes for coordinated visuals
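The beat-reactive behavior listed above can be sketched as a particle step whose forces scale with the music. This is a minimal CPU version, assuming a hypothetical normalized `intensity` value (0 to 1) supplied by an audio analyzer; `createParticles`, `stepParticles`, and the gravity, drag, and push constants are illustrative, not part of any library. Positions and velocities are packed into `Float32Array`s in the same flat xyz layout a Three.js `BufferAttribute` uses, so the position array could back a `Points` geometry.

```typescript
// Minimal CPU particle step driven by an audio intensity value (sketch).

interface ParticleState {
  positions: Float32Array;  // [x0, y0, z0, x1, y1, z1, ...]
  velocities: Float32Array; // same layout as positions
}

function createParticles(count: number): ParticleState {
  return {
    positions: new Float32Array(count * 3),
    velocities: new Float32Array(count * 3),
  };
}

/**
 * Advance every particle by dt seconds with semi-implicit (symplectic) Euler:
 * velocity is updated first, then position uses the new velocity.
 * `intensity` (0..1) is a hypothetical loudness/beat value that scales an
 * outward radial push, so particles burst outward on strong beats.
 */
function stepParticles(state: ParticleState, dt: number, intensity: number): void {
  const GRAVITY = -9.8; // acceleration along y (illustrative)
  const DRAG = 0.5;     // linear damping per second (illustrative)
  const PUSH = 20 * Math.min(Math.max(intensity, 0), 1); // music-driven radial acceleration
  const { positions: p, velocities: v } = state;

  for (let i = 0; i < p.length; i += 3) {
    // Unit direction away from the origin; a particle at the origin gets no push.
    const len = Math.hypot(p[i], p[i + 1], p[i + 2]) || 1;
    const ax = (PUSH * p[i]) / len - DRAG * v[i];
    const ay = (PUSH * p[i + 1]) / len - DRAG * v[i + 1] + GRAVITY;
    const az = (PUSH * p[i + 2]) / len - DRAG * v[i + 2];

    v[i] += ax * dt;
    v[i + 1] += ay * dt;
    v[i + 2] += az * dt;

    p[i] += v[i] * dt;
    p[i + 1] += v[i + 1] * dt;
    p[i + 2] += v[i + 2] * dt;
  }
}

// Example: 1,000 particles scattered around the origin, one 60 fps frame on a strong beat.
const demo = createParticles(1000);
for (let i = 0; i < demo.positions.length; i++) demo.positions[i] = Math.random() * 2 - 1;
stepParticles(demo, 1 / 60, 0.9);
```

After each step in a Three.js scene, setting `needsUpdate = true` on the geometry's position attribute uploads the new positions. A CPU loop like this is easiest to read; at the scale of millions of particles, the same update is typically moved to the GPU, which this sketch does not attempt.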

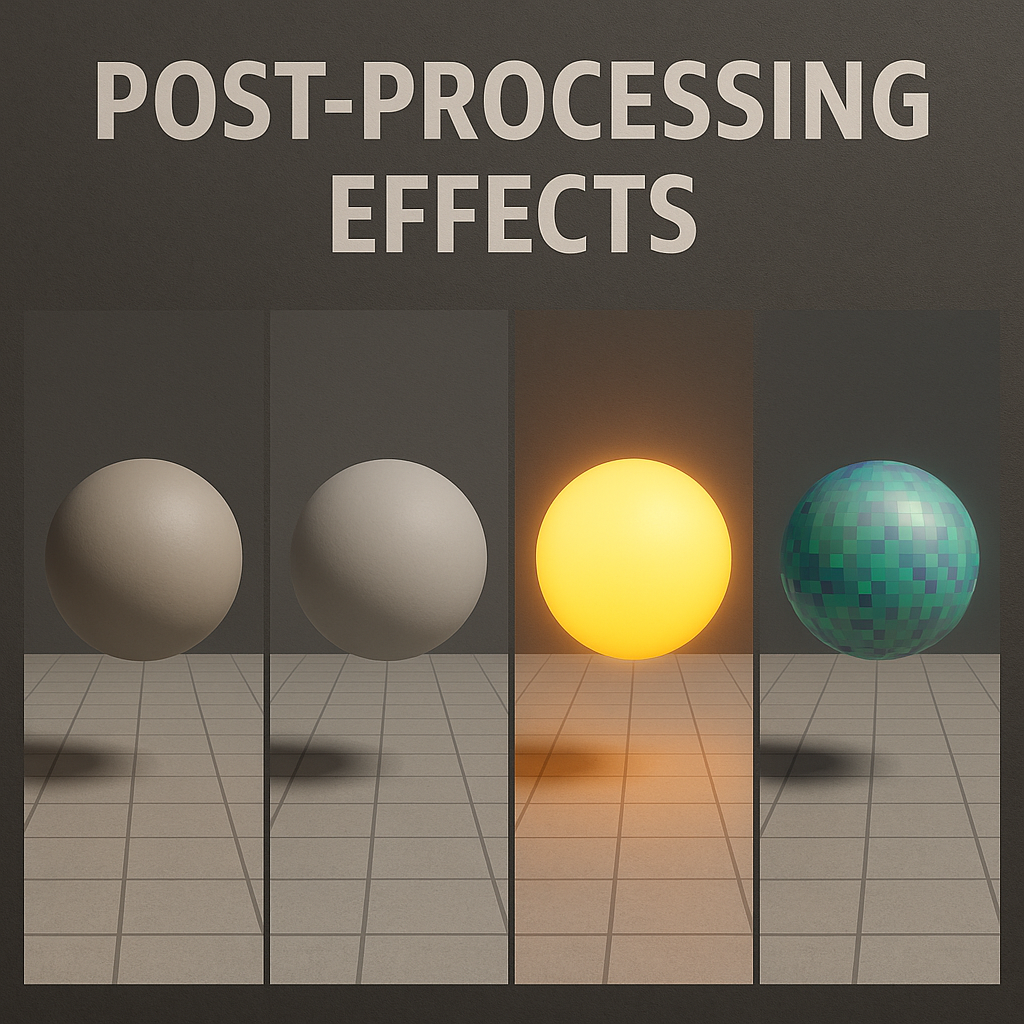

Post-Processing Effects

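Post-processing effects apply full-screen passes to the rendered frame, turning raw 3D renders into a cinematic image (in Three.js, these passes are typically chained with EffectComposer). Because each pass exposes tunable parameters, AI can adjust them to match the mood and energy of the music, creating moments that amplify the emotional impact.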

- Bloom effects that create ethereal glows around bright elements

- Depth of field that focuses audience attention on key visual elements

- Motion blur that enhances the sense of movement and energy

- Color grading that shifts with the emotional tone of the performance

- Neural style transfer effects that apply AI-generated artistic styles to 3D visuals
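The bloom effect listed above works by isolating the brightest pixels, blurring them, and adding the blur back onto the frame. The sketch below shows a simplified bright-pass step (the isolation stage) using Rec. 709 luminance weights, plus a hypothetical `bloomFromEnergy` mapping whose ranges are assumptions. It is not the exact shader Three.js's `UnrealBloomPass` uses, which performs this step on the GPU.

```typescript
// Sketch of a bloom bright pass plus a hypothetical energy-to-bloom mapping.

type RGB = [number, number, number]; // linear color channels

// Relative luminance with Rec. 709 / sRGB primaries.
function luminance([r, g, b]: RGB): number {
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

/**
 * Keep only the part of a pixel brighter than `threshold`.
 * Bloom later blurs this result and adds it to the frame, so only bright elements glow.
 */
function brightPass(color: RGB, threshold: number): RGB {
  const lum = luminance(color);
  if (lum <= threshold) return [0, 0, 0];
  // Scale the color by the fraction of its luminance that lies above the threshold.
  const k = (lum - threshold) / lum;
  return [color[0] * k, color[1] * k, color[2] * k];
}

interface BloomSettings {
  strength: number;  // how much of the blurred glow is added back
  threshold: number; // luminance above which pixels glow
}

/** Hypothetical mapping: louder music gives a stronger glow and a lower threshold. */
function bloomFromEnergy(energy: number): BloomSettings {
  const e = Math.min(Math.max(energy, 0), 1);
  return { strength: 0.5 + 2.0 * e, threshold: 0.9 - 0.4 * e };
}
```

`UnrealBloomPass` exposes `strength`, `radius`, and `threshold` properties, so values like those from `bloomFromEnergy` could be written to a pass instance each frame.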


Audio-Reactive Visualization

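Much of the value of Three.js for concert visuals comes from making the scene respond to music. A common approach is to split the audio spectrum into frequency bands, such as bass, mids, and treble, and let each band's level drive a visual element in real time, for example scaling a mesh on kick drums or shifting colors with the vocals.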
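Splitting a spectrum into bands can be sketched as follows. It assumes a byte spectrum of the kind filled by the Web Audio `AnalyserNode.getByteFrequencyData()`, whose bins run from 0 Hz up to half the sample rate; `frequencyBands` and the band edges (20 to 250 Hz, 250 Hz to 4 kHz, 4 kHz upward) are illustrative choices, not a standard.

```typescript
// Sketch: average a byte frequency spectrum into bass / mid / treble levels.

interface Bands {
  bass: number;   // 0..1
  mid: number;    // 0..1
  treble: number; // 0..1
}

/**
 * `spectrum` holds magnitudes 0..255 for bins spanning 0 Hz to sampleRate / 2.
 * With an AnalyserNode its length is fftSize / 2, and bin i sits at i * sampleRate / fftSize Hz.
 */
function frequencyBands(spectrum: Uint8Array, sampleRate: number): Bands {
  const binWidth = sampleRate / 2 / spectrum.length; // Hz per bin

  // Mean magnitude of bins whose frequency lies in [lo, hi), normalized to 0..1.
  const average = (lo: number, hi: number): number => {
    const start = Math.max(0, Math.ceil(lo / binWidth));
    const end = Math.min(spectrum.length, Math.ceil(hi / binWidth));
    if (end <= start) return 0;
    let sum = 0;
    for (let i = start; i < end; i++) sum += spectrum[i];
    return sum / ((end - start) * 255);
  };

  return {
    bass: average(20, 250),
    mid: average(250, 4000),
    treble: average(4000, sampleRate / 2),
  };
}

// Example with a synthetic spectrum: 1024 bins at 48 kHz, only bins 1..10 (about 23 to 234 Hz) active.
const spectrum = new Uint8Array(1024);
spectrum.fill(200, 1, 11);
console.log(frequencyBands(spectrum, 48000)); // bass ≈ 0.78, mid 0, treble 0
```

In a render loop, one would fill a `Uint8Array` of length `analyser.frequencyBinCount` with `analyser.getByteFrequencyData(data)`, pass it along with the `AudioContext`'s `sampleRate`, and map a band such as `bass` onto a visual property like a mesh's scale.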


AI & Three.js Integration

Combining AI with Three.js opens up new possibilities for concert visuals. AI models can analyze music in real time, extracting features such as beat, tempo, mood, and even semantic meaning, and feed them to the scene as parameters that drive visual elements dynamically.
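As a baseline for the beat and tempo features mentioned above, the classic non-AI approach flags a beat when a frame's energy clearly exceeds its recent average, then estimates tempo from the spacing between beats. The sketch below assumes per-frame energy values (for example, a bass level) arrive with timestamps in seconds; `EnergyBeatDetector` and its thresholds are hypothetical, and an AI model would replace the fixed threshold test with learned detection.

```typescript
// Sketch of a classic energy-based beat detector with a simple tempo estimate.

class EnergyBeatDetector {
  private history: number[] = [];
  private lastBeatTime = -Infinity;
  readonly beatTimes: number[] = [];

  constructor(
    private readonly windowSize = 43,   // about 1 s of history for 1024-sample frames at 44.1 kHz
    private readonly sensitivity = 1.4, // energy must exceed the recent average by 40%
    private readonly minGapSec = 0.25,  // refractory period: at most 240 beats per minute
  ) {}

  /** Feed one frame's energy at time `t` (seconds). Returns true if the frame is a beat. */
  update(energy: number, t: number): boolean {
    let isBeat = false;
    // Only decide once a full window of history is available.
    if (this.history.length === this.windowSize) {
      const avg = this.history.reduce((sum, e) => sum + e, 0) / this.windowSize;
      isBeat = energy > this.sensitivity * avg && t - this.lastBeatTime >= this.minGapSec;
    }
    if (isBeat) {
      this.lastBeatTime = t;
      this.beatTimes.push(t);
    }
    // Slide the window forward.
    this.history.push(energy);
    if (this.history.length > this.windowSize) this.history.shift();
    return isBeat;
  }

  /** Tempo in BPM from the median gap between detected beats, or null if too few beats. */
  estimateBpm(): number | null {
    if (this.beatTimes.length < 2) return null;
    const gaps = this.beatTimes
      .slice(1)
      .map((time, i) => time - this.beatTimes[i])
      .sort((a, b) => a - b);
    return 60 / gaps[Math.floor(gaps.length / 2)];
  }
}
```

The median gap is used rather than the mean because a single missed or spurious beat barely moves it.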

Key benefits of AI-driven Three.js visualizations:

- Music Understanding: AI detects complex musical patterns beyond simple beat detection

- Adaptive Rendering: Visuals automatically adjust complexity based on available GPU resources

- Semantic Visualization: AI can interpret lyrics and musical mood to generate thematically appropriate visuals

- Creative Amplification: Artists can define stylistic parameters while AI handles real-time execution
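Adaptive rendering can start from a simple feedback loop even before any AI is involved. The sketch below is a hypothetical `AdaptiveQuality` controller: it smooths measured frame times with an exponential moving average and nudges the render resolution down when frames exceed a 60 fps budget and up when there is headroom. The thresholds, step size, and ratio limits are assumptions to tune for the venue's hardware.

```typescript
// Sketch of adaptive resolution scaling driven by measured frame time (a heuristic, not an AI model).

class AdaptiveQuality {
  private avgFrameMs: number;

  constructor(
    private readonly targetMs = 1000 / 60, // frame budget for 60 fps
    private pixelRatio = 1.0,               // current render resolution multiplier
    private readonly minRatio = 0.5,
    private readonly maxRatio = 2.0,
    private readonly smoothing = 0.1,        // weight of each new sample in the moving average
  ) {
    this.avgFrameMs = targetMs;
  }

  /** Record one frame's duration in ms and return the pixel ratio for the next frame. */
  update(frameMs: number): number {
    // Exponential moving average, so a single slow frame does not trigger a change.
    this.avgFrameMs += this.smoothing * (frameMs - this.avgFrameMs);

    if (this.avgFrameMs > this.targetMs * 1.1) {
      // Over budget: render fewer pixels.
      this.pixelRatio = Math.max(this.minRatio, this.pixelRatio - 0.05);
    } else if (this.avgFrameMs < this.targetMs * 0.8) {
      // Comfortable headroom: render more pixels.
      this.pixelRatio = Math.min(this.maxRatio, this.pixelRatio + 0.05);
    }
    return this.pixelRatio;
  }
}
```

Each frame, the measured duration (for example from `performance.now()`) goes into `update()`, and the result can be passed to `WebGLRenderer.setPixelRatio()`, ideally only when it changes, since that call resizes the drawing buffer. The dead band between the 0.8 and 1.1 thresholds keeps the resolution steady while frame times stay near the target.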

Platforms like Compeller.ai make these sophisticated capabilities accessible to creators without requiring deep technical expertise in programming or machine learning.